Author | Lucía Burbano

By learning patterns from public data on violent and property crimes, scientists from the University of Chicago have developed a new algorithm that can predict future crimes one week in advance with about 90% accuracy. This raises a number of questions, one in particular: will artificial intelligence be the new tool of justice?

What is predictive justice?

Despite sounding extremely modern, jurimetrics or predictive justice is a concept that first emerged in 1949 thanks to Lee Loevinger, an American jurist who proposed using statistics and mathematical probability as a response to certain legal issues. He summarized his idea in an article published that year by the Minnesota Law Review.

Obviously, today's algorithms and Artificial Intelligence (AI), which can imitate cognitive processes, make decisions and solve problems by analyzing millions of data points, did not exist seventy years ago. But the debate was already under way.

AI to predict crimes

Returning to the legal arena, Artificial Intelligence, on the one hand, reduces the time legal professionals spend preparing a case by analyzing millions of judicial decisions similar to the ones they are handling.

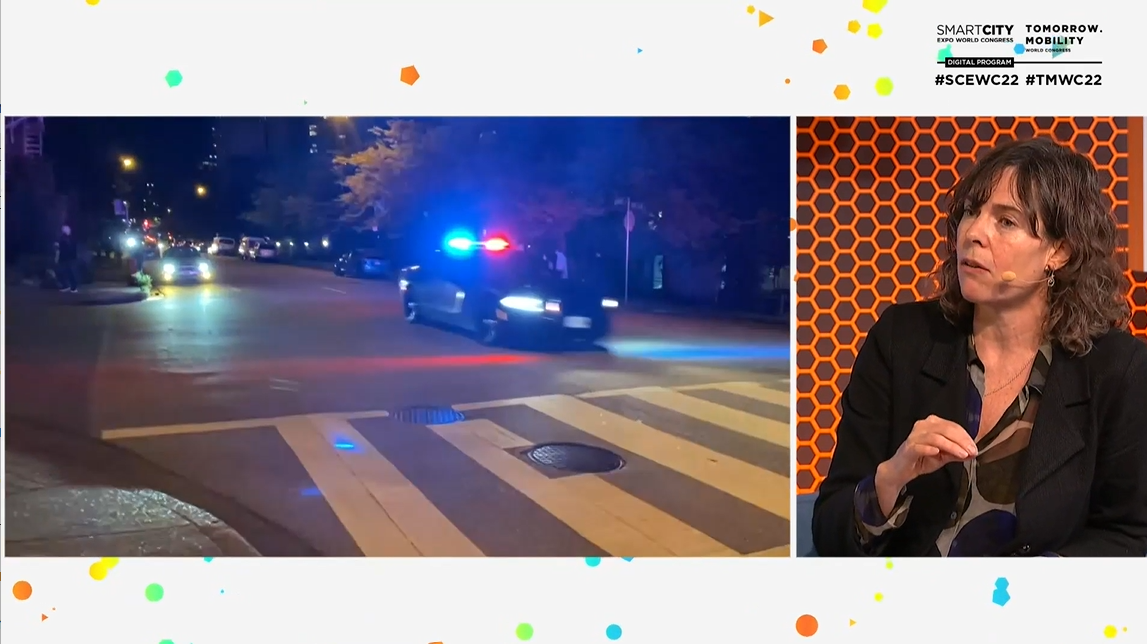

On the other hand, and focusing on predictive justice, the algorithm in the study published by Victor Rotaru, Yi Huang, Timmy Li, James Evans and Ishanu Chattopadhyay uses historical data from the city of Chicago on two major categories of reported incidents: violent crimes (homicides and assaults) and property crimes (burglaries, thefts and motor vehicle thefts).

They chose these data because such incidents are the most likely to be reported to the police, even in urban areas where there is distrust and a lack of cooperation between citizens and law enforcement agencies. Apart from Chicago, this predictive system has already been tested in Atlanta, Austin, Detroit, Los Angeles, Philadelphia, Portland and San Francisco.

China, Israel, United Kingdom… the predictive justice map

Also in the United States, the start-up PredPol was created in 2012 out of a joint research project between the Los Angeles Police Department and UCLA. Bill Bratton, the commissioner at the time, wanted to find a way of using data from COMPSTAT, the organizational management tool used by police, to produce predictive recommendations about how and where new crimes may be committed.

Since then, this predictive justice tool has been protecting one in 33 people in the United States, according to its website. In Pennsylvania, for example, robberies are said to have been reduced by 23% thanks to this predictive system.

Another country that uses predictive technologies in its judicial system is China. Since 2017, the artificial intelligence company Megvii has been working on an AI system that analyzes hours of video recordings, looking for unusual patterns that may be illegal in order to inform the police.

And there are more examples. The Eurocop program, developed by the University of Castellón in Spain, is creating a predictive map of specific places and times in a city where a crime may be committed.

**Cambridge University** has the predictive intelligence project Hart; Italy has XLAW; Germany, Precobs; Japan, Vaak; and Israel, Cortica, which is also used in India.

Discrepancies regarding the use of AI in justice

Not everyone agrees with the use of AI in police departments. The United Kingdom’s Liberty Human Rights has expressed its disagreement, alleging that the use of these programs is "discriminatory" and "dangerous".

"Our research has revealed that at least 14 police forces in the United Kingdom have used or intend to use discriminatory software algorithms to predict where offences will be committed and by whom. The predictive programs aren’t neutral. They are trained by people and rely on existing police data, and so they reflect patterns of discrimination and further embed them into police practice," they argue.

Furthermore, they warn that the public is given "very little information" about how predictive algorithms reach their decisions, and that even the police don’t understand how they do it. "This lack of transparency and understanding means these programs can’t be properly scrutinized".

Lastly, they are calling on police "to end their use of these dangerous and discriminatory programs".

The debate is guaranteed.

Photographs | Unsplash/Possessed Photography, Unsplash/Tingey Injury Law Firm