Author | Jaime Ramos

Can a machine think? This simple question hides a major scientific, historical and philosophical journey. Attempting to answer it precisely touches on a defining, utopian and transformative chapter in humanity’s passage through the universe.

Although the history of AI is long and prolific, only in recent years has the technology become prominent in society. This shift is largely due to the popularity of numerous tools designed to automate repetitive or large-scale tasks, such as managing the millions of data points generated by cities.

The recent democratization of AI also raises concerns about jobs and the future of many existing professions. Here, we will take a brief look at the history of AI, as well as its present and future.

What is artificial intelligence (AI)?

Artificial intelligence is defined by its purpose: enabling machines to have an intelligence of their own. The Council of Europe provides a useful definition.

The European institution describes it as a young discipline of sixty years, “which is a set of sciences, theories and techniques (including mathematical logic, statistics, probabilities, computational neurobiology, computer science) that aims to imitate the cognitive abilities of a human being.”

Its developments are intimately linked to those of computing and have led computers to perform increasingly complex tasks. However, the Council of Europe itself recognizes that opinions conflict on several fronts: on the concept itself; on whether current successes justify an affirmative answer to our initial question (Can a machine think?); and even on how the question should be worded.

Can a machine think like a human being? Or, on the other hand, can an artificial intelligence have an identity of its own? (A bit like the aptly titled Do Androids Dream of Electric Sheep?)

This second question recalls the difference between artificial intelligence and machine learning, with its limitations.

Who invented artificial intelligence and when?

Attributing major discoveries to the efforts of a single person is satisfying and makes them easier to learn and, along the way, to celebrate. Artificial intelligence is far removed from this pattern; it is closer to being part of the inheritance of human knowledge.

This is illustrated by the fact that one has to go back almost to the 4th century BC, to Aristotelian logic, to find the first in-depth formal model capable of emulating the rational responses of the human mind. Even today, it remains a valid precedent for the future of artificial intelligence, in terms of seeking a degree of humanization in machines.

Since then, early conceptions of artificial intelligence and of how to make it possible have recurred over time, enriched by achievements such as those of George Boole, who in 1854 laid the foundations of Boolean algebra, the logic underlying computer arithmetic. However, it was not until the 1930s that the modern form of the question “Can a machine think?” began to take shape.

Alan Turing: the definitive machine

It was Alan Turing who, in 1936, presented his automatic machine to the world. Although it was an abstract mathematical device rather than a physical computer, its importance lies in the fact that it defined the mathematical model of computation that underpins the logic of any algorithm. It became part of what is known as the Church-Turing thesis and took computer science to another level.
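To make the idea of a "mathematical model of computing" more concrete, here is a minimal sketch of a Turing machine simulator in Python. It is only an illustration, not Turing's original formulation: the function name `run_turing_machine`, the state names `scan` and `halt`, the blank symbol `_` and the example rule table `INVERT_BITS` are all assumptions chosen for this example. The machine reads one tape cell at a time, looks up a rule for its current state and symbol, writes a symbol, and moves its head.

```python
from typing import Dict, Tuple

# (state, symbol read) -> (next state, symbol to write, head movement)
Transitions = Dict[Tuple[str, str], Tuple[str, str, int]]

BLANK = "_"  # hypothetical symbol standing for an empty tape cell


def run_turing_machine(
    transitions: Transitions,
    tape_input: str,
    start_state: str = "scan",
    halt_state: str = "halt",
    max_steps: int = 10_000,
) -> str:
    """Run the machine until it halts and return the tape contents."""
    tape = dict(enumerate(tape_input))  # sparse tape: position -> symbol
    state, head, steps = start_state, 0, 0
    while state != halt_state:
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        symbol = tape.get(head, BLANK)
        state, write, move = transitions[(state, symbol)]
        tape[head] = write
        head += move
        steps += 1
    cells = [tape.get(i, BLANK) for i in range(min(tape), max(tape) + 1)]
    return "".join(cells).strip(BLANK)


# Example rule table (an assumption for illustration): flip every bit,
# then halt on the first blank cell.
INVERT_BITS: Transitions = {
    ("scan", "0"): ("scan", "1", +1),
    ("scan", "1"): ("scan", "0", +1),
    ("scan", BLANK): ("halt", BLANK, 0),
}

print(run_turing_machine(INVERT_BITS, "1011"))  # prints 0100
```

The whole "program" lives in the rule table; swapping in a different table gives a different algorithm on the same machine, which is the sense in which the model is general.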

Years later, in 1950, Turing addressed the problem of establishing whether or not a machine possesses intelligence by proposing his controversial test. The Turing test tries to determine how well a machine can imitate human intelligence: whether a person conversing with it can tell that their interlocutor is a machine.

The Dartmouth Conference: the founders of AI

The term artificial intelligence was coined by John McCarthy in the 1955 proposal for the Dartmouth Conference, which was held in the summer of 1956. This scientific workshop planted the seeds of what would follow decades later. At around the same time, Allen Newell, Cliff Shaw, and Herbert Simon presented their Logic Theorist, one of the first programs to imitate the way human beings solve mathematical problems.

Just one year later, Frank Rosenblatt presented his perceptron, an early artificial neural network. Marvin Minsky, also an active participant at the famous conference, would examine this model and its limitations in the 1969 book Perceptrons, written with Seymour Papert.
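As a rough illustration of what a perceptron does, the sketch below trains a single artificial neuron to reproduce the logical AND of two inputs. It is a simplified modern rendering rather than Rosenblatt's original design: the function names `predict` and `train_perceptron`, the learning rate, the number of epochs and the AND dataset are assumptions made for this example.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[float], int]  # (inputs, expected output 0 or 1)


def predict(weights: Sequence[float], bias: float, inputs: Sequence[float]) -> int:
    """Fire (1) if the weighted sum of the inputs plus the bias is positive."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(
    samples: List[Sample], epochs: int = 20, learning_rate: float = 0.1
) -> Tuple[List[float], float]:
    """Perceptron learning rule: nudge the weights whenever a prediction is wrong."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)  # -1, 0 or +1
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Truth table of logical AND, used here as a tiny training set.
AND_SAMPLES: List[Sample] = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_SAMPLES)
for inputs, _ in AND_SAMPLES:
    print(inputs, predict(weights, bias, inputs))
```

A single perceptron can only separate classes with a straight line, so it can learn AND but not XOR. That is the kind of limitation Minsky and Papert's 1969 analysis is known for highlighting.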

What is the objective of AI?

If the initial objective of artificial intelligence was to achieve a machine capable of thinking, today the goal has shifted towards improving that intelligence. Even so, the seven basic pillars of AI outlined at Dartmouth still stand today:

- Automated computers.

- How a computer can be programmed to use a language.

- Development of neural networks.

- Theory of the Size of a Calculation.

- Self-learning.

- Abstraction in AI.

- Randomness and Creativity.

To achieve these goals, MIT played (and continues to play) a fundamental role. There, McCarthy and Minsky created the first AI project, which would produce so many results decades later.

Current examples of artificial intelligence

That seed planted in the middle of the last century has grown at an accelerating pace over the last ten years. So much work is now carried out with artificial intelligence that there are growing concerns about the resources it consumes.

The proliferation of advances and the speed at which they occur have led to it being considered the fourth industrial revolution.

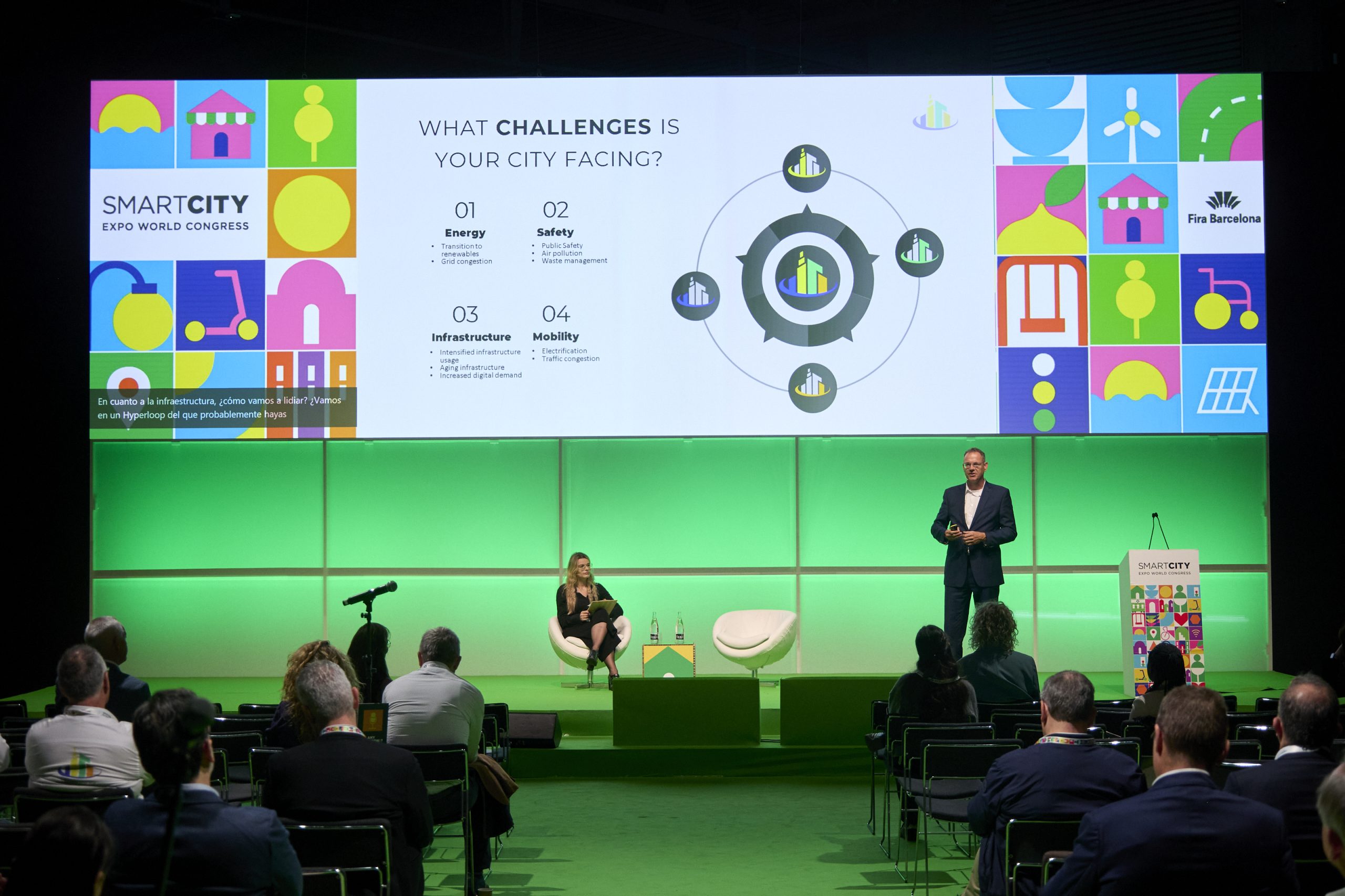

We can find dozens of examples in major cities around the globe: from algorithms that improve habitability and models to eradicate traffic jams, to robots that carry out human roles and the arrival of autonomous vehicles.

Apart from mobility, AI has interesting applications in fields such as urban planning, architecture and public health. In Buenos Aires, Argentina, the virtual assistant Boti demonstrated the capability to identify whether a user was infected with coronavirus. Users simply had to send an audio message of themselves coughing via WhatsApp, and Boti would respond with a diagnosis, achieving an accuracy rate of 88%.

In Beijing, China, the Beijing Citizen Social Service Card has been introduced. This virtual card integrates various pieces of information, simplifying access to public services for residents.

Lastly, in Singapore, the epitome of a smart city, AI is employed to enhance waste management. Containers are equipped with sensors that provide real-time data on their capacity, significantly improving collection efficiency.

Chatbots, Deep Fakes, and other everyday forms of AI

AI is already highly prevalent in our everyday lives, the result of a leap that has occurred over a relatively short period of time. In 2022, the arrival of ChatGPT, a chatbot application developed by OpenAI, made headlines as the most advanced AI for holding conversations, answering questions, and drafting increasingly sophisticated texts. This AI is improved over time, incorporating corrections and feedback from users.

Just as ChatGPT is an AI associated with language, Deep Fakes use AI to generate realistic false images from scratch. Their audio equivalent is Deep Voice, which mimics the human voice using advanced neural networks. This technology can convert text into speech or modify an existing voice, altering characteristics such as tone and speed.

Why was artificial intelligence created?

The most profound reason for the development of AI goes beyond how machines make our lives easier. One has to go back centuries, to the field of philosophy and human knowledge, in which the primitive concept of an intelligence beyond human intelligence touched the realms of myth, religion and metaphysics. This is illustrated, for example, by the figures of the Platonic demiurge, Pygmalion’s Galatea, or the golem in Hebrew tradition.

Throughout the history of art and literature, we can find other paradigmatic examples of the human dream of becoming a creator and replicating not just the mind, but also the heart, in the words of Baum’s Tin Man. In the 20th century, science fiction returned on countless occasions to the ethical dilemma of whether a machine can think, and how and why.

Among the many works of fiction on this theme, many readers find a universal answer in Fredric Brown’s minuscule story, paradoxically entitled Answer, a theme that Isaac Asimov explored more extensively in The Last Question.

Both authors starkly tie the meaning of AI to the need (or not) for it to be guided by human ethics. Of course, the dilemma also has a technical perspective that is very much in vogue. Stuart Russell and Peter Norvig, authors of Artificial Intelligence: A Modern Approach, tackle it by distinguishing between kinds of intelligence as follows:

- Systems that think like humans.

- Systems that act like humans.

- Systems that think rationally.

- Systems that act rationally.

In a century in which we know that, yes, a machine can think, this question should not be underestimated: why should machines think? The controversial answer is tied to human skepticism about accepting or rejecting the idea that thinking is for humans only.

Europe, the first to establish a law regulating AI

Given the numerous debates surrounding AI, the EU initiated discussions in 2021 on the necessity of establishing laws to regulate its various characteristics and uses. The goal is to ensure AI systems are “safe, legal, and trustworthy, while also respecting fundamental rights”.

After various stages of the legislative process, the EU became, in 2024, the first territory in the world to approve a comprehensive legal text on artificial intelligence. The legislation entered into force in August 2024, with most of its provisions applying from 2026.

Some of the main points of the AI Act are:

- Classification based on risk: Systems posing an unacceptable risk, such as an AI system designed to manipulate human behavior, are prohibited. The main part of the text addresses high-risk AI systems, which are subject to regulation.

- A smaller section covers limited risks. For example, developers must ensure that end users are aware they are interacting with AI, such as chatbots and deepfakes.

- The artificial intelligence systems used and introduced in the European market must be safe and respect citizens’ rights.

- Investment and innovation in the field of AI in Europe are also encouraged.

Other countries working in this direction include Canada and China. Cities such as New York have also established regulations related to AI. There, Local Law 144 prohibits companies from using automated employment decision tools unless the tool has been subject to a bias audit.

Images | Bank of England, null0 (CC), Daimler, iStock/ipopba, Possessed Photography, Conny Schneider